Since I wrote my original introductory blog post on this project, I've made considerable progress. The first prototype is now fully constructed, and I'm making significant headway in the development of the firmware. As such, I think it's time for another project update.

Given that the project is being developed entirely as open-source hardware and software, the latest schematics and code can always be found in the project's GitHub repository.

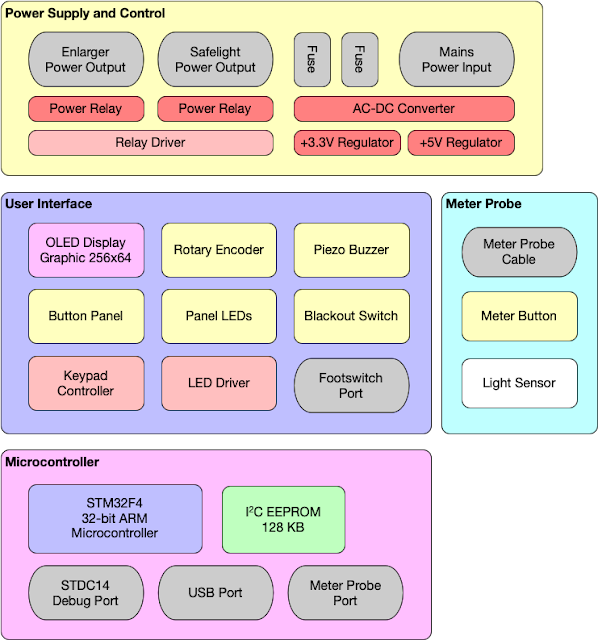

Main Unit Construction

The main unit consists of a rather large PCB, with a double-sided design. The reason it is large is that all the components and most of the ports of the Printalyzer are directly mounted to the board. The way I decided to do this, the board is actually mounted in the enclosure upside-down. This puts the buttons and display on the "bottom", while everything else is on the "top". I took this approach because several of the components are rather tall, and there wouldn't be enough clearance if they had to fit within the height limits of the buttons. From my teardowns of other pieces of darkroom electronics, this construction approach is actually quite common.

For the enclosure, I designed something using Front Panel Express and their housing profile script. While rather expensive for building in quantity, at the prototype stage this gave me very nice results.

For the top of the unit, I installed a piece of Rosco Supergel #19 (Fire Red) sandwiched between some transparent material in the gap between the top of the display and the enclosure. This both protects the display and keeps its output spectrum paper-safe.

Finding a nice metal knob for the encoder, without a pesky direction-mark, took some effort. Ultimately I went with a type of knurled guitar knob (from mxuteuk), which seems to be the magic set of keywords to find these things.

For the keycaps, I ultimately went with black. This was mostly because it was the only color for which I had all the necessary parts at the time, given that these are often backordered or special order with lead time. When looking at the unit on the desk, black also seems like the nicest choice. However, in the darkroom, they are hard to see. I also have these keycaps in dark gray, and they can be special-ordered in light gray. (Having that array of LEDs around the keys really helps, of course.)

Meter Probe Construction

The meter probe is a much simpler device. It essentially consists of a light sensor, a button, and minimalist support circuitry to handle power regulation and voltage level shifting. Right now the sensor I'm using is the TCS3472, which has well-documented lux and color temperature calculation guides and might be useful for color analysis as well.

(One benefit of my design here is that I can make future versions of this meter probe using basically any sensor I want. The only requirement is that the sensor, or really just the front-end of this circuit board, has an I2C interface.)

Since the enclosure requires a somewhat custom shape, I had to go with 3D printing for this one. For the two prototypes I've built so far, one was self-printed and the other I had printed by Shapeways. The latter looks a lot nicer, so that's what I'm showing here.

The hardest part of assembling this was probably the fiddly process of connecting the cable. For the cabling, I went with a 6-pin Mini-DIN connector. In practice, that meant finding someone selling M-M black PS/2 cables and slicing one in half.

One last thing this meter probe needs is some sort of cover for the sensor hole. My plan is to have something printed/cut for me out of something like polycarbonate, from a place that makes panel graphic overlays. Ideally this overlay will be light in color, with markings pointing to the sensor hole. Thus far I've been putting off this part, but getting it in place is going to be essential to finalizing my calibration of the sensor readings.

Firmware Development

I'm now at the stage in firmware development where I have all the hardware inside the Printalyzer working, and have a stable base from which to build up the rest of the device's functionality. My initial goal here is to fully flesh out the functionality of a mostly-full-featured f-stop enlarger timer, then to later follow on with the exposure metering functions. I'm going about it this way because metering requires a lot of testing that's hard to do "outside the darkroom," and I'll need the timing functions regardless.

(In all the screenshots below, I apologize for the "gunky" picture quality. The red gel sandwich over the display tends to reflect a fair bit of dust, which ends up looking far more distracting in pictures than it does in real life.)

Home Display

The home display shows the current time and selected contrast grade. It also has a reserved space for the tone graph. While I have figured out what that graph will look like, it's not easy to show here until I do actual metering.

From this display it's also possible to change the adjustment increment, and obviously to start the exposure timer.

Another feature of the home display (not shown here) is fine adjustment. Using the encoder knob you can enter an explicit stop adjustment (in 1/12th stop units), or even an explicit exposure time. I expect this capability to be useful for repeating previous exposures, in lieu of re-metering.
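
To make the 1/12th stop units concrete, here is a minimal sketch of the arithmetic involved. This is not the firmware's actual code; the function names are made up for illustration. It relies only on the usual f-stop convention that each full stop doubles or halves the exposure time.

```python
import math

STEPS_PER_STOP = 12  # the fine adjustment works in 1/12th stop units


def adjusted_time(base_seconds: float, twelfths: int) -> float:
    """Scale a base exposure time by a whole number of 1/12 stop steps."""
    return base_seconds * 2.0 ** (twelfths / STEPS_PER_STOP)


def stops_between(from_seconds: float, to_seconds: float) -> float:
    """Return how many stops separate two exposure times."""
    return math.log2(to_seconds / from_seconds)


if __name__ == "__main__":
    # Print a few adjustments around a hypothetical 10 second base time.
    for steps in (-12, -1, 0, 1, 12):
        print(f"{steps:+3d}/12 stop -> {adjusted_time(10.0, steps):.2f} s")
```

The second function goes the other way: given a previous exposure time you want to repeat, it tells you how far that is from the current time in stops.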

Test Strip Mode

The test strip mode supports both incremental and separate exposures for test strips, and making strips in both 7-patch and 5-patch layouts. The design places the current exposure time in the middle, and then makes patches above and below this time in units of the current adjustment increment.
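
As a rough sketch of how the patch times could be computed (the function names here are hypothetical and this is not lifted from the firmware), the current time sits in the middle patch and each neighbor is one adjustment increment away. For incremental strips, each exposure only needs to add the difference between one patch and the next.

```python
def patch_times(base_seconds: float, increment_stops: float, patches: int = 7):
    """Total exposure time for each patch, shortest first.

    The base time lands on the middle patch, so `patches` should be odd
    (5 or 7 in the layouts described above).
    """
    half = patches // 2
    return [base_seconds * 2.0 ** (i * increment_stops)
            for i in range(-half, half + 1)]


def incremental_exposures(times):
    """Exposure lengths for an incremental strip.

    The first exposure hits every patch for the shortest time; each later
    exposure adds only the difference needed to reach the next patch time.
    """
    steps = [times[0]]
    for shorter, longer in zip(times, times[1:]):
        steps.append(longer - shorter)
    return steps


if __name__ == "__main__":
    totals = patch_times(8.0, 1.0, patches=5)
    print("patch totals:", totals)
    print("incremental :", incremental_exposures(totals))
```

For separate exposures, the patch totals are used directly, one exposure per patch.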

Another nice feature, made possible by having a graphical display, is that it actually tells you what patches should be covered for the next exposure. This way it's harder to lose your place during test strip creation.
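
The bookkeeping behind that hint is simple. The sketch below is my own illustration with assumed conventions (patches numbered from 1, shortest exposure first, a hypothetical function name), not necessarily how the display logic is written.

```python
def patches_to_cover(step: int, patches: int, incremental: bool = True):
    """Patch numbers (1-based) to cover before exposure number `step`.

    `step` counts from 0. In incremental mode, patches that have already
    received their full time are covered one by one. In separate mode,
    everything except the patch being exposed is covered.
    """
    if incremental:
        return list(range(1, step + 1))
    return [p for p in range(1, patches + 1) if p != step + 1]


if __name__ == "__main__":
    for step in range(5):
        print(step + 1, patches_to_cover(step, 5), patches_to_cover(step, 5, False))
```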

Settings Menu

The settings menu is fairly rough at the moment, and probably does need some user interface improvements. That being said, it shows another benefit of using a reconfigurable graphical display. I can show nice text menus that actually tell you what each setting is, and then return to a full-screen numerical time display when done.

Enlarger Calibration

This little tidbit is the first feature I've worked on so far that actually uses the sensor in the meter probe. It's not yet complete, from a user-interface and settings point of view, but the underlying process is fully implemented now.

The basic issue is that any enlarger timer is effectively just "flipping a switch." It can control when that switch turns on, and when it turns off, but little else. Unfortunately, lamps typically do not turn on or off instantly. On both ends, there is a delay and a ramp time. While these are typically short, not accounting for them can cause consistency issues with short exposures. This problem becomes especially troubling when doing incremental short exposures, such as with test strips and burning.

The goal of the calibration process is to make sure that the "user visible exposure time" is the time the paper is exposed to the equivalent of the full light output of the enlarger, rather than simply the time between the on and off states of its "power switch."

Calibration works by first measuring reference points with both the enlarger on (full brightness) and the enlarger off (full darkness). It then runs the enlarger through a series of on/off cycles while taking frequent measurements. When complete, it generates 6 different values:

- Time from "power on" until the light level starts to increase

- Time it takes the enlarger to reach full brightness (rise time)

- Full brightness time required for an exposure equivalent to what was sensed during the rise time

- Time from "power off" until the light level starts to decrease

- Time it takes the enlarger to become completely off (fall time)

- Full brightness time required for an exposure equivalent to what was sensed during the fall time
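
To illustrate how the first three of these values could fall out of the measurements, here is a simplified sketch. It assumes timestamped readings taken from the moment of "power on", plus the dark and full-brightness reference readings. The threshold, the function name, and the trapezoidal integration are my illustrative choices, not a description of the real firmware.

```python
def rise_profile(samples, dark, full, threshold=0.02):
    """Estimate turn-on delay, rise time, and full-brightness equivalent time.

    samples: (seconds since "power on", raw reading) pairs, in time order.
    dark, full: reference readings with the enlarger off and fully on.
    """
    span = full - dark
    # Normalize readings to 0.0 (dark) .. 1.0 (full brightness).
    levels = [(t, min(max((v - dark) / span, 0.0), 1.0)) for t, v in samples]

    # Find the last sample that is still dark and the first at full brightness.
    first_lit = next(i for i, (_, n) in enumerate(levels) if n > threshold)
    start = max(first_lit - 1, 0)
    end = next(i for i, (_, n) in enumerate(levels) if n >= 1.0 - threshold)

    delay = levels[start][0]
    rise_time = levels[end][0] - delay

    # Integrate the normalized level over the ramp (trapezoid rule). The
    # result is the full-brightness time that delivers the same exposure.
    equivalent = 0.0
    for (t0, n0), (t1, n1) in zip(levels[start:end], levels[start + 1:end + 1]):
        equivalent += (n0 + n1) / 2.0 * (t1 - t0)
    return delay, rise_time, equivalent
```

The fall-side values can be estimated the same way, by measuring from "power off" and integrating the level as it decays back to the dark reference.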

These values are then fed into the exposure timer code, and used to schedule the various events (on, off, tick beeps, displayed time, etc.) that occur during exposure.
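
As a simplified sketch of the core scheduling idea (not the actual timer code), the six values can be used to work out how long the switch must stay on so that the paper receives a requested equivalent exposure. The class and function names are hypothetical, the profile values in the example are invented rather than measured, and the model assumes the lamp always reaches full brightness before it is switched off.

```python
from dataclasses import dataclass


@dataclass
class EnlargerProfile:
    on_delay: float     # "power on" until the light starts to increase
    rise_time: float    # time to go from dark to full brightness
    rise_equiv: float   # full-brightness equivalent of the rise period
    off_delay: float    # "power off" until the light starts to decrease
    fall_time: float    # time to go from full brightness to dark
    fall_equiv: float   # full-brightness equivalent of the fall period


def equivalent_exposure(p: EnlargerProfile, switch_seconds: float) -> float:
    """Equivalent full-brightness exposure for a given switch-on duration."""
    full_brightness = switch_seconds + p.off_delay - p.on_delay - p.rise_time
    if full_brightness < 0:
        raise ValueError("lamp never reaches full brightness")
    return p.rise_equiv + full_brightness + p.fall_equiv


def switch_on_duration(p: EnlargerProfile, exposure_seconds: float) -> float:
    """How long to keep the switch on to deliver the requested exposure."""
    full_brightness = exposure_seconds - p.rise_equiv - p.fall_equiv
    if full_brightness < 0:
        raise ValueError("exposure is shorter than this lamp can deliver")
    return full_brightness + p.on_delay + p.rise_time - p.off_delay


if __name__ == "__main__":
    # Made-up profile values, for illustration only.
    lamp = EnlargerProfile(0.04, 0.20, 0.08, 0.02, 0.30, 0.10)
    print(f"naive 2 s switch time -> {equivalent_exposure(lamp, 2.0):.2f} s equivalent")
    print(f"switch time for 2 s   -> {switch_on_duration(lamp, 2.0):.2f} s")
```

The same offsets can be applied to the tick beeps and the displayed countdown, so that what the user sees and hears tracks the equivalent exposure rather than the raw switch time.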

Using the numbers from one of my calibration runs as a rough example of what this all means: without profiling, a simple 2 second exposure would actually expose the paper to light for about 2.4 seconds, but would deliver only a 1.88 second equivalent exposure.

These numbers come from a simple halogen desk lamp, which is more convenient for basic testing. I've also run the same calibration cycle on my real enlarger. It takes longer to rise to full brightness, but is faster at turning off. (Its similar case is 2.2 seconds with a 1.78 second equivalent exposure.)

Now I'll admit this doesn't seem like much, but enlargers do vary and it can suddenly become critical if you're making <1s incremental exposures for test strips or burning.

Blackout Mode

This feature is a little "quality of life" nicety that I haven't seen anyone else do. Every once in a while, some of us want to print on materials, such as RA-4 color paper, that have to be handled in absolute darkness. This means that once your enlarger is all set up for the print, you need to turn out all of the lights in the darkroom before taking out the paper. Having to manually turn off your safelights and throw a cover over your illuminated enlarger timer gets a bit annoying.

This is why I added the "Blackout" switch to the Printalyzer! Flipping this switch turns off the safelight and all of the light-emitting parts of the device. Of course everything still works when in this mode, so you can still make prints and test strips in complete darkness. (Eventually I'll probably add additional audio cues and lock some ancillary features to improve usability.)

Next Steps

At the outset of this project, I collected a long list of "good ideas" for things the device should do. That list is still growing, of course. Eventually I will get to them, but right now my priority is to get the standard features implemented and stable.

I have a few more things to do for the basic f-stop timer functionality, such as burn exposure programs. After that, I need to work on an actual user interface for adding/changing/managing enlarger profiles.

Once these things are done, I'll finally move on to paper profiles and print exposure metering. I expect that to be a long-tail item, because it will require a lot of work on sensor calibration and learning about sensor behaviors. However, I plan to do as much of this as possible by first converting raw sensor readings into stable and standardized exposure units. This way, it will be possible to experiment with different sensors (as needed) without dramatic changes to how the metering/profiling process works.

Another thing I'll likely do, interspersed with this, is start to take advantage of the USB port I put onto the device. There are a few features I want to implement with this, including:

- Backup/Restore of user settings and profiles with a thumbdrive

- Firmware updates with a thumbdrive (right now it requires a special programmer device, which isn't practical for "end user" use).

- Keyboard entry of profile names (I'll make sure they can be entered without a keyboard, but being able to use one is a nice thing.)

- Connection to densitometers to help with paper profiling (not sure how this will work, but it can't hurt to try).

I'm still not sure whether, or at what point, I'll proceed with transforming this project into an actual "product" or "kit." The biggest hangup is really that the device necessarily plugs into mains power and switches mains-powered devices. That means it may be hard to safely sell/distribute units without first forming a company and going through various painful and expensive certification processes. However, I'm not going to worry too much about that until I have something rather complete.

Regardless, all the necessary data to build one yourself will always be freely and publicly available. However, actually assembling one of these does require circuit board assembly skills and tools that not everyone is likely to have.